Natural language processing (NLP) is a subfield of computer science and artificial intelligence concerned with the interaction between computers and human (natural) languages, in particular the understanding, interpretation, and generation of human language.

NLP has a wide range of applications, including machine translation, speech recognition, text summarization, sentiment analysis, and question answering.

Recent Advances in NLP

In recent years, there have been significant advances in NLP techniques, thanks to the development of deep learning models. Deep learning models can learn complex patterns in data, which has made it possible to develop NLP systems that are more accurate and reliable than ever before.

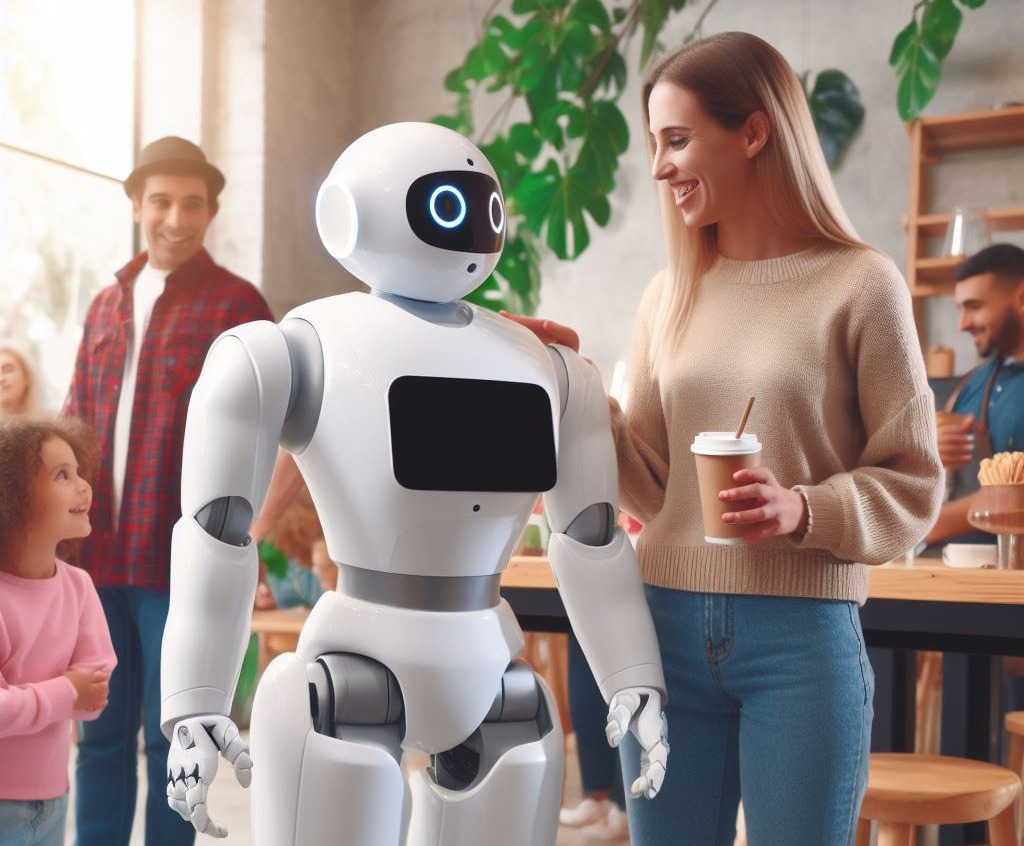

As NLP technology continues to improve, it will have a profound impact on the way we interact with computers. For example, NLP-powered chatbots are already being used to provide customer service, answer questions, and even write creative content. In the future, we can expect to see NLP used in even more ways, such as to help us with our work, our education, and our personal lives.

One article from Harvard Business Review discusses how NLP tools have advanced in recent years, changing common notions of what this technology can do. It describes NLP as the branch of AI focused on how computers can process language as humans do, and among the most visible areas of recent progress. According to the article, NLP models have been used to write an article for The Guardian, and AI-authored blog posts have gone viral, feats that weren’t possible a few years ago.

Another article from Dataquest discusses using machine learning and natural language processing tools for text analysis. The authors try multiple packages to enhance their text analysis, covering sentiment analysis, keyword extraction, topic modelling, K-means clustering, concordance, and a query answering model.

In this article, we will discuss some of the advanced NLP techniques that are used for text analysis.

Named Entity Recognition (NER)

Named entity recognition (NER) is the task of locating named entities in text and assigning each one a category. Named entities are typically people, organizations, locations, dates, and times. NER is challenging because the same string can denote different entity types depending on context: "Apple" may be a company in one sentence and a fruit in the next.

There are several families of NER algorithms: rule-based, statistical, and neural network-based. Rule-based algorithms rely on hand-written patterns and lists of known names (gazetteers). Statistical algorithms, such as hidden Markov models and conditional random fields, learn from labelled text how likely each word is to belong to each entity type. Neural network-based algorithms also learn from labelled text, but they learn their own features from the data instead of relying on hand-designed ones.
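
The rule-based approach is the easiest one to illustrate in a few lines. The following is a minimal sketch, not a production recognizer: the `GAZETTEER` entries, the date pattern, and the function name `find_entities` are all assumptions made for this example.

```python
import re

# Hypothetical gazetteer: a tiny hand-made lookup of known entity names.
GAZETTEER = {
    "Harvard Business Review": "ORGANIZATION",
    "The Guardian": "ORGANIZATION",
    "London": "LOCATION",
    "Ada Lovelace": "PERSON",
}

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)
# Rule: dates written like "10 December 1815".
DATE_PATTERN = re.compile(rf"\b\d{{1,2}} (?:{MONTHS}) \d{{4}}\b")


def find_entities(text):
    """Return (start, entity_text, label) tuples sorted by position."""
    entities = []
    # Rule 1: exact matches against the gazetteer.
    for name, label in GAZETTEER.items():
        for match in re.finditer(re.escape(name), text):
            entities.append((match.start(), match.group(), label))
    # Rule 2: anything that looks like a day-month-year date.
    for match in DATE_PATTERN.finditer(text):
        entities.append((match.start(), match.group(), "DATE"))
    return sorted(entities)


sample = "Ada Lovelace was born in London on 10 December 1815."
for start, entity, label in find_entities(sample):
    print(f"{start:>3}  {label:<12}  {entity}")
```

A recognizer like this only finds names it has been told about, which is the main reason statistical and neural approaches are usually preferred for open-ended text.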

Sentiment Analysis

Sentiment analysis is the task of identifying the sentiment expressed in a piece of text, usually as positive, negative, or neutral. It is challenging because sentiment depends on context: negation ("not good"), sarcasm, and domain-specific vocabulary can all reverse the apparent polarity of individual words.

There are several families of sentiment analysis algorithms: rule-based, machine learning, and deep learning. Rule-based algorithms score text using a lexicon of words with known polarity, together with hand-written rules for cases such as negation. Machine learning algorithms train a classifier, such as naive Bayes or logistic regression, on labelled examples represented as word counts or similar features. Deep learning algorithms train neural networks, such as recurrent networks or transformers, that learn their own representation of the text.
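
As an illustration of the rule-based approach, here is a minimal sketch of a lexicon-based scorer with one negation rule. The word scores in `LEXICON`, the `NEGATORS` set, and the function name `sentiment` are assumptions invented for this example, not taken from any published lexicon.

```python
import re

# Hypothetical toy lexicon; real lexicons contain thousands of scored words.
LEXICON = {
    "good": 1, "great": 2, "love": 2, "helpful": 1,
    "bad": -1, "terrible": -2, "hate": -2, "slow": -1,
}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    """Label text as positive, negative, or neutral using word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Simple rule: a negator directly before a word flips its polarity.
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in [
    "The support team was great and helpful",
    "The app is not good",
    "The parcel arrived on Tuesday",
]:
    print(f"{sentiment(review):<8}  {review}")
```

The negation rule only looks one word back, so a phrase such as "not very good" would still be scored as positive. Gaps like this are what machine learning and deep learning approaches try to close by learning from labelled examples.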

Semantic Search

Semantic search is a type of search that considers the meaning of the words in a query. This contrasts with traditional keyword search, which only matches the literal words in a query against the words in each document.

Semantic search is more challenging than keyword search because it requires the system to understand that different words can express the same meaning. This can be done using a variety of techniques, such as machine-learned representations of words and sentences, and knowledge graphs that link related concepts.
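
The difference between the two kinds of search can be shown with a toy example. The sketch below assumes a hand-made `CONCEPTS` map as a stand-in for a knowledge graph or learned word representations; the map, the sample `DOCUMENTS`, and the function names are all hypothetical.

```python
import re

# Hypothetical concept map standing in for a knowledge graph or learned
# representations: each surface word points to a shared concept label.
CONCEPTS = {
    "car": "vehicle", "cars": "vehicle", "automobile": "vehicle",
    "cheap": "low_cost", "affordable": "low_cost", "inexpensive": "low_cost",
}

DOCUMENTS = [
    "Affordable automobile servicing in your area",
    "How to bake sourdough bread at home",
    "Cheap flights to Lisbon",
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def keyword_search(query, documents):
    """Return documents sharing at least one literal word with the query."""
    query_words = set(tokenize(query))
    return [d for d in documents if query_words & set(tokenize(d))]


def to_concepts(text):
    """Map each word to its concept, leaving unknown words unchanged."""
    return {CONCEPTS.get(word, word) for word in tokenize(text)}


def semantic_search(query, documents):
    """Rank documents by Jaccard overlap between query and document concepts."""
    query_concepts = to_concepts(query)
    scored = []
    for doc in documents:
        doc_concepts = to_concepts(doc)
        overlap = len(query_concepts & doc_concepts)
        if overlap:
            scored.append((overlap / len(query_concepts | doc_concepts), doc))
    return [doc for _, doc in sorted(scored, reverse=True)]


print(keyword_search("cheap car", DOCUMENTS))
print(semantic_search("cheap car", DOCUMENTS))
```

For the query "cheap car", the keyword search only finds the flights page, because it shares the literal word "cheap". The concept-based search ranks the car servicing page first, even though that page contains neither query word.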

Speech Recognition

Speech recognition is the task of converting spoken language into text. Speech recognition is used in a variety of applications, such as voice-activated assistants, dictation software, and call centres.

Traditional speech recognition systems combine two main components: an acoustic model and a language model. The acoustic model relates the audio signal to speech sounds, estimating how likely each sequence of phonemes is given the audio. The language model estimates the probability of a word given the words that precede it, which helps the system choose between transcriptions that sound alike.
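
Language modelling is simple enough to sketch directly. The example below is a minimal bigram model, which conditions each word only on the single word before it; the toy `CORPUS` and the function names are assumptions made for illustration, and the estimates use plain counts with no smoothing.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real language model is trained on far more text.
CORPUS = [
    "recognize speech with a model",
    "recognize speech in a call",
    "wreck a nice beach",
]


def train_bigram_model(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split()  # <s> marks the sentence start
        for previous, current in zip(words, words[1:]):
            counts[previous][current] += 1
    return counts


def probability(counts, previous, current):
    """Maximum-likelihood estimate of P(current | previous)."""
    total = sum(counts[previous].values())
    return counts[previous][current] / total if total else 0.0


model = train_bigram_model(CORPUS)
print(probability(model, "recognize", "speech"))
print(probability(model, "a", "model"))
```

In a full recognizer, scores like these would be combined with the acoustic model's scores, so that a word sequence is preferred when it both matches the audio and is plausible as language.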

Text Summarization

Text summarization is the task of generating a shorter version of a piece of text while preserving the most valuable information. Text summarization is a challenging task, as it requires the model to understand the content of the text and to identify the most valuable information.

Text summarization algorithms fall into two groups: extractive and abstractive. Extractive algorithms score the sentences of the original text and copy the most important ones into the summary unchanged. Abstractive algorithms generate new sentences that paraphrase the text, so the summary can contain wording that never appears in the original.
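
Extractive summarization can be sketched with a simple frequency heuristic: sentences whose words occur often in the text are assumed to be the important ones. This is only one of many possible scoring schemes, and the stop-word list and the function name `summarize` are assumptions for this example.

```python
import re
from collections import Counter

# Hypothetical stop-word list; real systems use a much longer one.
STOP_WORDS = {"the", "a", "an", "of", "to", "and", "is", "are", "in", "it", "that", "on"}


def content_words(text):
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def summarize(text, num_sentences=1):
    """Return the highest-scoring sentences in their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    frequencies = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average word frequency, so long sentences are not favoured automatically.
        return sum(frequencies[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in chosen)


text = (
    "Solar panels convert sunlight into electricity. "
    "Solar panels are cheaper than they were a decade ago. "
    "My neighbour owns a cat."
)
print(summarize(text, num_sentences=1))
```

Because the summary is built from sentences copied verbatim, it is always grammatical, but it cannot merge or rephrase information the way an abstractive system can.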

Topic Modelling

Topic modelling is the task of discovering the topics that run through a collection of documents, usually without any labelled examples. A topic is a group of words that tend to occur together, such as "goal", "match", and "team". Topic modelling is challenging because the topics are not known in advance: the model has to infer both what the topics are and which of them are present in each document.

There are several topic-modelling algorithms, including latent Dirichlet allocation (LDA), latent semantic analysis (LSA), and probabilistic latent semantic analysis (PLSA). LDA is a generative probabilistic model that treats each document as a mixture of topics and each topic as a probability distribution over words. LSA is a linear-algebra method that applies singular value decomposition to a term-document matrix to uncover latent semantic structure. PLSA is a probabilistic counterpart of LSA that models word-document co-occurrences as a mixture of latent topics.
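
To make LDA more concrete, the following is a small sketch of collapsed Gibbs sampling, one common way to fit an LDA model. It is written for readability, not speed; the toy `DOCS` corpus, the hyperparameter values, and the function name `lda_gibbs` are assumptions chosen for this example, and a real project would normally use an established library instead.

```python
import random

# Hypothetical toy corpus, already tokenized.
DOCS = [
    "goal match team player goal".split(),
    "team player match league".split(),
    "election vote party policy".split(),
    "vote party election minister vote".split(),
]


def lda_gibbs(docs, num_topics=2, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA.

    Returns per-document topic proportions and per-topic word counts.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * num_topics for _ in docs]      # topic counts per document
    topic_word = [dict() for _ in range(num_topics)]  # word counts per topic
    topic_total = [0] * num_topics                    # total words per topic
    assignments = []

    # Start from a random topic assignment for every word occurrence.
    for d, doc in enumerate(docs):
        row = []
        for word in doc:
            k = rng.randrange(num_topics)
            row.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word] = topic_word[k].get(word, 0) + 1
            topic_total[k] += 1
        assignments.append(row)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, word in enumerate(doc):
                # Remove the current assignment before resampling it.
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][word] -= 1
                topic_total[k] -= 1
                # Weight of each topic given all other assignments.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t].get(word, 0) + beta)
                    / (topic_total[t] + beta * vocab_size)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][word] = topic_word[k].get(word, 0) + 1
                topic_total[k] += 1

    # Smoothed share of each topic in each document; every row sums to 1.
    proportions = [
        [(count + alpha) / (len(doc) + num_topics * alpha) for count in row]
        for row, doc in zip(doc_topic, docs)
    ]
    return proportions, topic_word


proportions, topic_word = lda_gibbs(DOCS)
for doc, row in zip(DOCS, proportions):
    print([round(p, 2) for p in row], " ".join(doc))
```

The sampler is random, so the topic numbering and the exact proportions depend on the seed. On a corpus this small the output should be read as a demonstration of the mechanics, not as a meaningful result.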

Conclusion

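These techniques can be used to solve a wide range of NLP problems, often in combination with simpler preprocessing steps. For example, tokenization breaks a piece of text into words, and the resulting word counts can be used to calculate the TF-IDF (term frequency-inverse document frequency) score of each word, which is high for words that are frequent in one document but rare across the rest of the collection. These scores can then be used to identify the most important keywords in the document.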
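
The tokenization and TF-IDF steps can be sketched as follows. This uses the basic formulation, term frequency multiplied by the logarithm of inverse document frequency; libraries often apply smoothing or normalization on top of it. The sample `DOCUMENTS` and the function names are assumptions for this example.

```python
import math
import re
from collections import Counter

# Hypothetical toy documents.
DOCUMENTS = [
    "The chatbot answers customer questions about billing.",
    "The translation model converts English text into French.",
    "The summarizer shortens long reports into brief notes.",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def tf_idf(documents):
    """Return one {word: score} dict per document."""
    tokenized = [tokenize(doc) for doc in documents]
    # Number of documents in which each word appears at least once.
    document_frequency = Counter(word for tokens in tokenized for word in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            word: (count / len(tokens)) * math.log(len(documents) / document_frequency[word])
            for word, count in counts.items()
        })
    return scores


def top_keywords(documents, n=3):
    """Return the n highest-scoring words per document, ties broken alphabetically."""
    return [
        [word for word, _ in sorted(s.items(), key=lambda item: (-item[1], item[0]))[:n]]
        for s in tf_idf(documents)
    ]


for doc, keywords in zip(DOCUMENTS, top_keywords(DOCUMENTS)):
    print(keywords, "<-", doc)
```

The word "the" appears in every document, so its inverse document frequency is log(1) = 0 and it never ranks as a keyword, which is exactly the behaviour TF-IDF is designed to produce.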

Neural networks are a powerful tool for NLP, and they have been used to achieve state-of-the-art results on a variety of tasks, including machine translation, speech recognition, and text summarization.

NLP is a rapidly growing field, and new techniques are being developed all the time. As NLP technology continues to improve, it will have an even greater impact on our lives. For example, NLP-powered chatbots are already being used to provide customer service, and they are likely to become even more common in the future.

NLP is also being used to develop new educational tools, such as interactive textbooks and adaptive learning systems. These tools can help students learn more effectively by providing them with personalized instruction and feedback.

As NLP technology continues to evolve, it will have a profound impact on the way we interact with computers and the world around us.